What is AI? Learn About Artificial Intelligence

Artificial Intelligence is the simulation of human intelligence processes by machines, especially computer systems. Specific applications of AI include expert systems, natural language processing, speech recognition, and machine vision.

AI & Developers

Developers use AI to more efficiently perform tasks that are otherwise done manually, connect with customers, identify patterns, and solve problems. To get started with AI, developers should have a background in mathematics and feel comfortable with algorithms.

When getting started with using AI to build an application, it helps to start small. By building a relatively simple project, such as Tic-Tac-Toe, you’ll learn the basics of AI. Learning by doing is a great way to level up any skill, and AI is no different. Once you’ve successfully completed one or more small-scale projects, there are no limits to where AI can take you.
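As a sketch of the kind of small project described above, here is a minimal Tic-Tac-Toe player that chooses moves with the minimax search algorithm. Minimax is one common choice for this game, not the only one; the board representation (a 9-character string of "X", "O", or " ", indexed row by row) and the function names are assumptions made for illustration.

```python
from functools import lru_cache
from typing import Optional

# The eight winning lines on a 3x3 board, with squares indexed 0..8 row by row.
LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),  # rows
    (0, 3, 6), (1, 4, 7), (2, 5, 8),  # columns
    (0, 4, 8), (2, 4, 6),             # diagonals
]


def winner(board: str) -> Optional[str]:
    """Return 'X' or 'O' if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def minimax(board: str, player: str) -> int:
    """Score a position from X's point of view: +1 X wins, -1 O wins, 0 draw.

    `player` is whoever moves next; X maximizes the score and O minimizes it.
    """
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    if " " not in board:
        return 0  # board is full with no winner: a draw
    opponent = "O" if player == "X" else "X"
    scores = [
        minimax(board[:i] + player + board[i + 1:], opponent)
        for i, cell in enumerate(board)
        if cell == " "
    ]
    return max(scores) if player == "X" else min(scores)


def best_move(board: str, player: str) -> int:
    """Return the index of an optimal empty square for `player` to take."""
    opponent = "O" if player == "X" else "X"
    empty = [i for i, cell in enumerate(board) if cell == " "]
    choose = max if player == "X" else min
    return choose(empty, key=lambda i: minimax(board[:i] + player + board[i + 1:], opponent))


# X holds squares 0 and 1, O holds 3 and 4; X to move should complete the top row.
print(best_move("XX OO    ", "X"))  # 2
```

Caching `minimax` with `lru_cache` keeps the full game-tree search fast, since Tic-Tac-Toe has only a few thousand distinct positions.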

How AI Technology can Help Organizations

The central tenet of AI is to replicate and then exceed the way humans perceive and react to the world. It’s fast becoming the cornerstone of innovation. Powered by various forms of machine learning that recognize patterns in data to enable predictions, AI can add value to your business by:

- Providing a more comprehensive understanding of the abundance of data available.

- Relying on predictions to automate excessively complex or mundane tasks.

AI Model Training and Development

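There are multiple stages in developing machine learning models, including training and inference. In training, a model learns from data; in inference, the trained model is applied to new data to make predictions. Together, AI training and inference make up the process of experimenting with machine learning models to solve a problem.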

For example, a machine learning engineer may experiment with different candidate models for a computer vision problem, such as detecting bone fractures in X-ray images.

To improve the accuracy of these models, the engineer would feed data to the models and tune their parameters until they meet a predefined accuracy threshold. These training needs, measured by model complexity, are growing exponentially every year.
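To make the train-then-infer cycle concrete, here is a minimal sketch in plain Python. It assumes a toy one-feature binary classification problem in place of X-ray images, uses logistic regression trained by gradient descent as a deliberately simple stand-in for the candidate models, and stops once training accuracy reaches a hypothetical threshold of 0.95.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def accuracy(w, b, xs, ys):
    """Fraction of examples whose thresholded prediction matches the label."""
    correct = sum(int(sigmoid(w * x + b) >= 0.5) == y for x, y in zip(xs, ys))
    return correct / len(xs)


def train(xs, ys, lr=0.5, threshold=0.95, max_epochs=1000):
    """Training: fit w and b by gradient descent on the log-loss.

    Stops as soon as training accuracy meets the predefined threshold
    (or after max_epochs passes over the data).
    """
    w, b = 0.0, 0.0
    for _ in range(max_epochs):
        if accuracy(w, b, xs, ys) >= threshold:
            break
        # Average gradient of the log-loss with respect to w and b.
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        w -= lr * sum(e * x for e, x in zip(errors, xs)) / len(xs)
        b -= lr * sum(errors) / len(xs)
    return w, b


def infer(w, b, x):
    """Inference: apply the trained parameters to a new, unseen input."""
    return int(sigmoid(w * x + b) >= 0.5)


# Toy labeled data: one feature per example, label 1 for the "positive" class.
xs = [-2.0, -1.5, -1.0, -0.5, 0.5, 1.0, 1.5, 2.0]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = train(xs, ys)
print(infer(w, b, 3.0), infer(w, b, -3.0))
```

Real vision models have millions of parameters rather than two, but the loop has the same shape: measure against the threshold, adjust parameters, repeat, then use the result for inference.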

Infrastructure technologies key to AI training at scale include cluster networking, such as RDMA and InfiniBand, bare metal GPU compute, and high-performance storage.

Artificial Intelligence History

The term artificial intelligence was coined in 1956, but AI has become more popular today thanks to increased data volumes, advanced algorithms, and improvements in computing power and storage.

Early AI research in the 1950s explored topics like problem-solving and symbolic methods. In the 1960s, the US Department of Defense took an interest in this type of work and began training computers to mimic basic human reasoning. For example, the Defense Advanced Research Projects Agency (DARPA) completed street mapping projects in the 1970s. DARPA also produced intelligent personal assistants in 2003, long before Siri, Alexa, or Cortana were household names.

This early work paved the way for the automation and formal reasoning that we see in computers today, including decision support systems and smart search systems that can be designed to complement and augment human abilities.

While Hollywood movies and science fiction novels depict AI as human-like robots that take over the world, the current evolution of AI technologies isn’t that scary – or quite that smart. Instead, AI has evolved to provide many specific benefits in every industry. Keep reading for modern examples of artificial intelligence in health care, retail, and more.

Why is Artificial Intelligence important?

AI automates repetitive learning and discovery through data. Instead of automating manual tasks, AI performs frequent, high-volume, computerized tasks, and it does so reliably and without fatigue. Of course, humans are still essential to set up the system and ask the right questions.

AI adds intelligence to existing products. Many products you already use will be improved with AI capabilities, much like Siri was added as a feature to a new generation of Apple products. Automation, conversational platforms, bots, and smart machines can be combined with large amounts of data to improve many technologies. Upgrades at home and in the workplace range from security intelligence and smart cams to investment analysis.

AI adapts through progressive learning algorithms to let the data do the programming. AI finds structure and regularities in data so that algorithms can acquire skills. Just as an algorithm can teach itself to play chess, it can teach itself what product to recommend next online. And the models adapt when given new data.
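Here is a minimal sketch of "letting the data do the programming" for recommendations. Instead of hand-written rules, the class below counts which products are bought together and recommends the item most often co-purchased with a given one. This item co-occurrence approach is a simple technique chosen for illustration, not how any particular retailer works, and the class and method names are hypothetical. Each call to `update` with a new basket can change later recommendations, which is the sense in which the model adapts to new data.

```python
from collections import Counter, defaultdict
from itertools import permutations
from typing import Optional


class CoPurchaseRecommender:
    """Hypothetical item-to-item recommender learned from purchase baskets."""

    def __init__(self):
        # counts[a][b] = number of baskets that contained both a and b
        self.counts = defaultdict(Counter)

    def update(self, basket):
        """Learn from one new basket; call it whenever new data arrives."""
        for a, b in permutations(set(basket), 2):
            self.counts[a][b] += 1

    def recommend(self, item) -> Optional[str]:
        """Return the product most often bought with `item`, or None if unseen."""
        together = self.counts.get(item)
        if not together:
            return None
        return together.most_common(1)[0][0]


rec = CoPurchaseRecommender()
rec.update(["phone", "case"])
rec.update(["phone", "case", "charger"])
print(rec.recommend("phone"))  # case

# New data arrives and the recommendation changes without any code changes.
rec.update(["phone", "charger"])
rec.update(["phone", "charger"])
print(rec.recommend("phone"))  # charger
```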

AI analyzes more and deeper data using neural networks that have many hidden layers. Building a fraud detection system with five hidden layers used to be impossible. All that has changed with vastly increased computing power and big data. You need lots of data to train deep learning models because they learn directly from the data.
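To show what "many hidden layers" means structurally, here is a minimal forward pass through a fully connected network with five hidden layers, written in plain Python. The layer sizes, the ReLU activation, the random weights, and reading the final sigmoid output as a fraud score are all illustrative assumptions; a real system would learn its weights from large amounts of training data rather than draw them at random.

```python
import math
import random


def dense(inputs, weights, biases):
    """One fully connected layer: out[j] = sum_i inputs[i] * weights[j][i] + biases[j]."""
    return [sum(x * w for x, w in zip(inputs, row)) + b for row, b in zip(weights, biases)]


def relu(values):
    return [max(0.0, v) for v in values]


def init_network(sizes, seed=0):
    """Create random weights for consecutive layer sizes, e.g. [4, 16, 16, 1]."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes, sizes[1:]):
        weights = [[rng.gauss(0, 1 / math.sqrt(n_in)) for _ in range(n_in)] for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers


def forward(network, x):
    """Pass x through every hidden layer (ReLU), then a sigmoid output layer."""
    *hidden, (out_w, out_b) = network
    for weights, biases in hidden:
        x = relu(dense(x, weights, biases))
    logit = dense(x, out_w, out_b)[0]
    return 1.0 / (1.0 + math.exp(-logit))


# 4 input features -> five hidden layers of 16 units -> 1 output score.
net = init_network([4] + [16] * 5 + [1])
score = forward(net, [0.2, -1.3, 0.7, 3.1])
print(round(score, 3))
```

Each extra hidden layer adds another weight matrix to multiply through, which is why deeper networks need both more data to fit and more compute to run.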

AI achieves incredible accuracy through deep neural networks. For example, your interactions with Alexa and Google are all based on deep learning. And these products keep getting more accurate the more you use them. In the medical field, AI techniques from deep learning and object recognition can now be used to pinpoint cancer on medical images with improved accuracy.

AI gets the most out of data. When algorithms are self-learning, the data itself is an asset: the answers are in the data, and you just have to apply AI to find them. Since the role of data is now more important than ever, it can create a competitive advantage. If you have the best data in a competitive industry, even if everyone is applying similar techniques, the best data will win.

Types of Artificial Intelligence

Artificial intelligence can be organized in several ways, depending on stages of development or actions being performed.

For instance, four stages of AI development are commonly recognized:

- Reactive machines: Limited AI that only reacts to different kinds of stimuli based on preprogrammed rules. Does not use memory and thus cannot learn with new data. IBM’s Deep Blue that beat chess champion Garry Kasparov in 1997 was an example of a reactive machine.

- Limited memory: Most modern AI is considered to be limited memory. It can use memory to improve over time by being trained with new data, typically through an artificial neural network or other training model. Deep learning, a subset of machine learning, is considered limited memory artificial intelligence.

- Theory of mind: Theory of mind AI does not currently exist, but research is ongoing into its possibilities. It describes AI that can emulate the human mind and has decision-making capabilities equal to that of a human, including recognizing and remembering emotions and reacting in social situations as a human would.

- Self-aware: A step above theory of mind AI, self-aware AI describes a hypothetical machine that is aware of its own existence and has the intellectual and emotional capabilities of a human. Like theory of mind AI, self-aware AI does not currently exist.

A more useful way of broadly categorizing types of artificial intelligence is by what the machine can do. All of what we currently call artificial intelligence is considered artificial “narrow” intelligence, in that it can perform only narrow sets of actions based on its programming and training. For instance, an AI algorithm that is used for object classification won’t be able to perform natural language processing. Google Search is a form of narrow AI, as are predictive analytics and virtual assistants.

Artificial general intelligence (AGI) would be the ability of a machine to “sense, think, and act” just like a human. AGI does not currently exist. The next level would be artificial superintelligence (ASI), in which the machine would be able to function in all ways superior to a human.

How Does Artificial Intelligence Work?

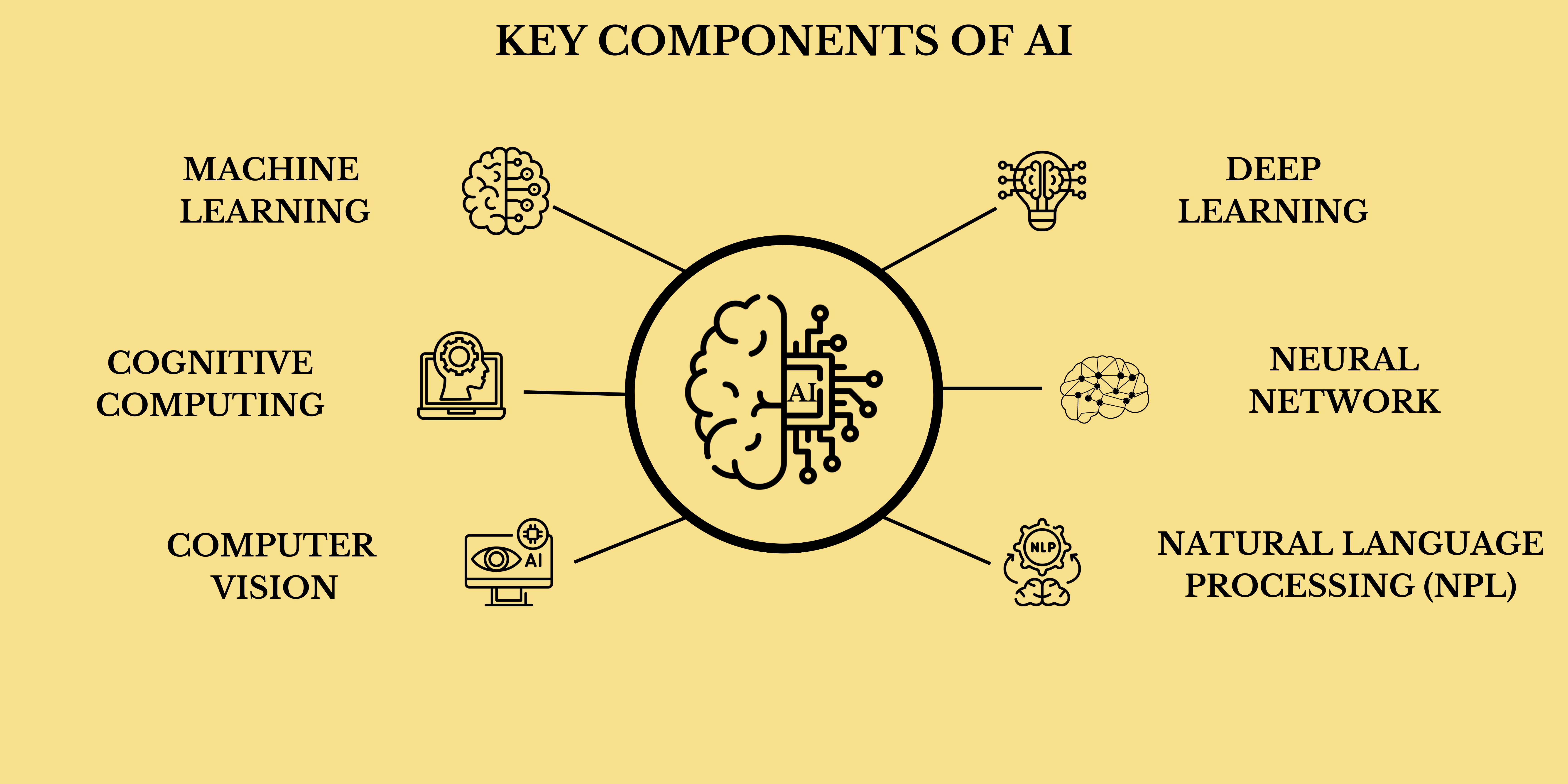

AI works by combining large amounts of data with fast, iterative processing and intelligent algorithms, allowing the software to learn automatically from patterns or features in the data. AI is a broad field of study that includes many theories, methods, and technologies. Its major subfields, along with the technologies that enable and support it, include the following:

Computer Vision relies on pattern recognition and deep learning to recognize what’s in a picture or video. When machines can process, analyze and understand images, they can capture images or videos in real time and interpret their surroundings.

Natural Language Processing (NLP) is the ability of computers to analyze, understand, and generate human language, including speech. The next stage of NLP is natural language interaction, which allows humans to communicate with computers using normal, everyday language to perform tasks.

Graphics Processing Units (GPUs) are key to AI because they provide the heavy compute power that’s required for iterative processing. Training neural networks requires big data plus compute power.

The Internet of Things generates massive amounts of data from connected devices, most of it unanalyzed. Automating models with AI will allow us to use more of it.

Advanced Algorithms are being developed and combined in new ways to analyze more data faster and at multiple levels. This intelligent processing is key to identifying and predicting rare events, understanding complex systems and optimizing unique scenarios.

APIs, or application programming interfaces, are portable packages of code that make it possible to add AI functionality to existing products and software packages. They can add image recognition capabilities to home security systems and Q&A capabilities that describe data, create captions and headlines, or call out interesting patterns and insights in data.

In summary, the goal of AI is to provide software that can reason on input and explain on output. AI will provide human-like interactions with software and offer decision support for specific tasks, but it’s not a replacement for humans – and won’t be anytime soon.

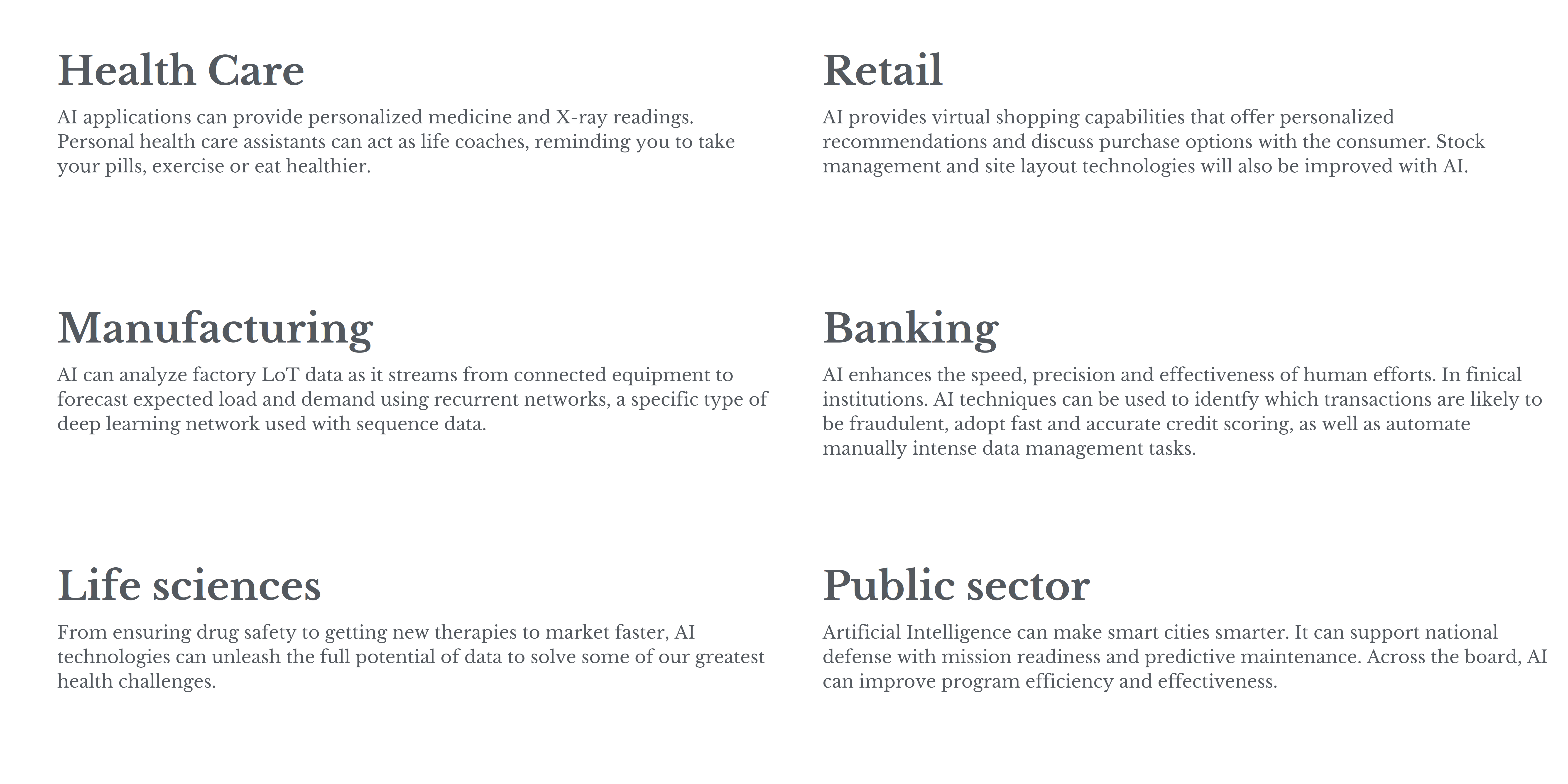

How Is Artificial Intelligence Being Used?

Every industry has a high demand for AI capabilities, including systems that can be used for automation, learning, legal assistance, risk notification, and research. Specific uses of AI in industry include:

Benefits of AI

Artificial Intelligence Training Models

When businesses talk about AI, they often talk about “training data.” But what does that mean? Remember that limited-memory artificial intelligence is AI that improves over time by being trained with new data. Machine learning is a subset of artificial intelligence that uses algorithms trained on data to obtain results.

In broad strokes, three kinds of learning models are often used in machine learning:

Supervised learning is a machine learning model that maps a specific input to an output using labeled training data (structured data). In simple terms, to train the algorithm to recognize pictures of cats, feed it pictures labeled as cats.
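Here is a minimal sketch of supervised learning: a k-nearest-neighbors classifier that predicts a label for a new input by majority vote among the closest labeled training examples. k-NN is one simple supervised method chosen for illustration, and the two-feature toy data standing in for "cat" and "not cat" pictures is hypothetical.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Predict a label for `query` from labeled (features, label) pairs."""
    # Sort training examples by Euclidean distance to the query point.
    nearest = sorted(train, key=lambda ex: math.dist(ex[0], query))[:k]
    # Majority vote among the labels of the k closest examples.
    return Counter(label for _, label in nearest).most_common(1)[0][0]


# Labeled training data: each input is paired with its known output.
training_data = [
    ((1.0, 1.2), "cat"), ((0.9, 1.0), "cat"), ((1.1, 0.8), "cat"),
    ((4.0, 4.2), "not cat"), ((4.1, 3.9), "not cat"), ((3.8, 4.0), "not cat"),
]
print(knn_predict(training_data, (1.0, 1.1)))  # cat
```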

Unsupervised learning is a machine learning model that learns patterns based on unlabeled data (unstructured data). Unlike supervised learning, the end result is not known ahead of time. Rather, the algorithm learns from the data, categorizing it into groups based on attributes. For instance, unsupervised learning is good at pattern matching and descriptive modeling.
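As an illustration of unsupervised learning, here is a minimal k-means clustering sketch: it receives points with no labels and groups them by similarity. k-means is one common clustering algorithm picked for this example; the data, k=2, the fixed iteration count, and seeding the centroids with the first k points are simplifying assumptions.

```python
import math


def kmeans(points, k, iterations=10):
    """Group unlabeled points into k clusters; returns (centroids, assignments)."""
    centroids = [list(p) for p in points[:k]]  # naive initialization: first k points
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        # Update step: move each centroid to the mean of its assigned points.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids, assignments


# Unlabeled data: two visible groups, but no labels are given to the algorithm.
data = [(1.0, 1.0), (8.0, 8.0), (1.2, 0.8), (0.9, 1.1), (8.2, 7.9), (7.8, 8.1)]
centroids, groups = kmeans(data, k=2)
print(groups)  # [0, 1, 0, 0, 1, 1]
```

The algorithm never learns what the groups "mean"; it only discovers that the points fall into two clusters based on their attributes.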

In addition to supervised and unsupervised learning, a mixed approach called semi-supervised learning is often employed, where only some of the data is labeled. In semi-supervised learning, an end result is known, but the algorithm must figure out how to organize and structure the data to achieve the desired results.
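One simple semi-supervised technique is self-training (pseudo-labeling): use the few labeled examples to label the unlabeled example the model is most confident about, add it to the training set, and repeat. The sketch below uses a nearest-centroid classifier on one-dimensional toy data; the classifier, the confidence rule (gap between the distances to the two nearest class centroids, which assumes at least two classes), and the data are all assumptions for illustration.

```python
def class_centroids(labeled):
    """Mean feature value of each class, from (x, label) pairs."""
    groups = {}
    for x, label in labeled:
        groups.setdefault(label, []).append(x)
    return {label: sum(xs) / len(xs) for label, xs in groups.items()}


def confidence(x, centroids):
    """Gap between the distances to the nearest and second-nearest centroids."""
    d = sorted(abs(x - c) for c in centroids.values())
    return d[1] - d[0]


def self_train(labeled, unlabeled):
    """Pseudo-label unlabeled points one at a time, most confident first."""
    labeled, unlabeled = list(labeled), list(unlabeled)
    while unlabeled:
        centroids = class_centroids(labeled)
        x = max(unlabeled, key=lambda u: confidence(u, centroids))
        label = min(centroids, key=lambda lab: abs(x - centroids[lab]))
        labeled.append((x, label))  # the prediction becomes a new training label
        unlabeled.remove(x)
    return labeled


# Only two points are labeled; the other five carry no labels.
result = dict(self_train([(1.0, "low"), (9.0, "high")], [2.0, 3.0, 8.0, 7.5, 4.5]))
print(result)
```

Labeling the easiest points first lets the class centroids shift toward the data before the ambiguous point (4.5 here) is decided.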

Reinforcement learning is a machine learning model that can be broadly described as “learn by doing.” An “agent” learns to perform a defined task by trial and error (a feedback loop) until its performance is within a desirable range. The agent receives positive reinforcement when it performs the task well and negative reinforcement when it performs poorly. An example of reinforcement learning would be teaching a robotic hand to pick up a ball.
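Here is a minimal sketch of this feedback loop using tabular Q-learning, one standard reinforcement learning algorithm chosen here for brevity. The environment is a hypothetical five-cell corridor: the agent starts at cell 0, earns +1 for reaching cell 4, and pays a small penalty for every other step. The environment, rewards, and hyperparameters are illustrative assumptions and far simpler than teaching a robotic hand.

```python
import random

N_STATES, GOAL = 5, 4
ACTIONS = (-1, +1)  # move left or move right


def step(state, action):
    """Environment: return (next_state, reward, done)."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    if nxt == GOAL:
        return nxt, 1.0, True   # positive reinforcement for reaching the goal
    return nxt, -0.01, False    # small negative reinforcement for each other step


def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Trial and error: usually take the best-known action, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            nxt, reward, done = step(state, action)
            # Feedback: nudge the estimate toward reward + discounted future value.
            future = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = nxt
    return q


q = q_learning()
# The learned policy: the best action in each non-goal cell.
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)]
print(policy)
```

After training, the agent should prefer moving right in every cell, because that path collects the reward with the fewest penalized steps.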

Strong AI vs Weak AI

AI can be categorized as weak or strong.

- Weak AI, also known as narrow AI, is designed and trained to complete a specific task. Industrial robots and virtual personal assistants, such as Apple’s Siri, use weak AI.

- Strong AI, also known as artificial general intelligence (AGI), describes programming that can replicate the cognitive abilities of the human brain. When presented with an unfamiliar task, a strong AI system can use fuzzy logic to apply knowledge from one domain to another and find a solution autonomously. In theory, a strong AI program should be able to pass both a Turing test and the Chinese Room test.

Working together with AI

Artificial intelligence is not here to replace us. It augments our abilities and makes us better at what we do. Because AI algorithms learn differently than humans, they look at things differently. They can see relationships and patterns that escape us. This human-AI partnership offers many opportunities. It can:

- Bring analytics to industries and domains where it’s currently underutilized.

- Improve the performance of existing analytic technologies, like computer vision and time series analysis.

- Break down economic barriers, including language and translation barriers.

- Augment existing abilities and make us better at what we do.

- Give us better vision, better understanding, better memory and much more.

The principal limitation of AI is that it learns from the data. There is no other way in which knowledge can be incorporated. That means any inaccuracies in the data will be reflected in the results. And any additional layers of prediction or analysis have to be added separately.

Today’s AI systems are trained to do a clearly defined task. The system that plays poker cannot play solitaire or chess. The system that detects fraud cannot drive a car or give you legal advice.

In other words, these systems are very, very specialized. They are focused on a single task and are far from behaving like humans.

Fundamentals of Artificial Intelligence

Artificial intelligence (AI) is a field of computer science that focuses on creating intelligent machines that can perform tasks that would typically require human intelligence, such as learning, problem-solving, decision-making, and language understanding. The fundamentals of AI are based on three main components:

Data: AI algorithms require large amounts of data to learn from and make predictions or decisions. The quality and quantity of data are critical to the accuracy and effectiveness of AI systems.

Algorithms: AI algorithms are sets of rules and procedures that enable machines to process and analyze data, make predictions or decisions, and improve their performance over time through machine learning.

Computing Power: AI systems require significant computing power to process large amounts of data and perform complex tasks. Advancements in hardware, such as GPUs and TPUs, have greatly improved the speed and efficiency of AI computations.

There are several subfields of AI, including machine learning, natural language processing, computer vision, robotics, and expert systems. Each of these subfields focuses on specific applications and techniques for building intelligent machines.

Overall, the fundamentals of AI are based on data, algorithms, and computing power, and the field continues to advance rapidly, with new applications and techniques emerging all the time. AI has the potential to transform many industries and improve our daily lives in countless ways.

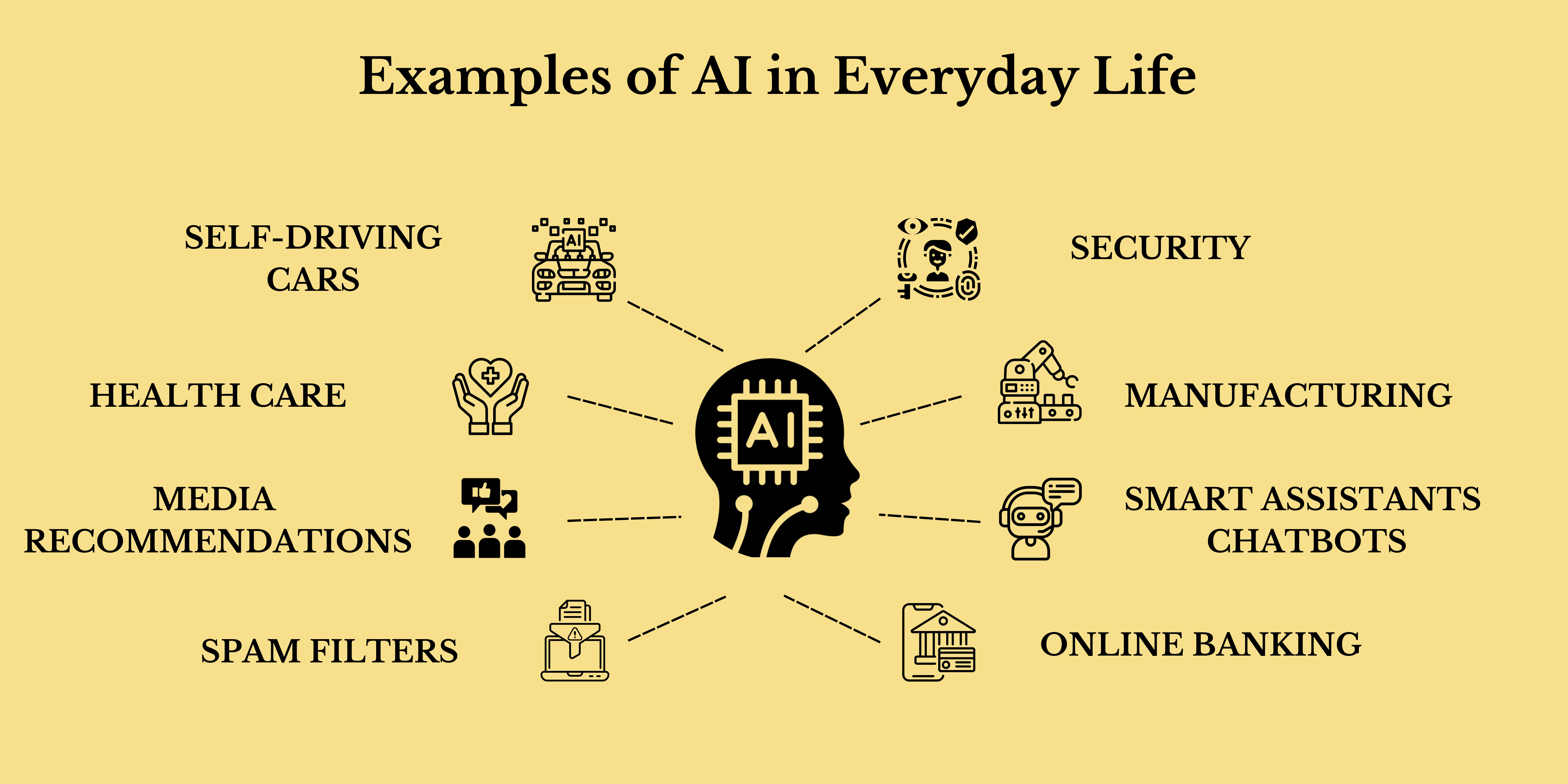

What are examples of AI technology and how is it used today?

There are many examples of AI technology being used today across a wide range of industries and applications. Here are some examples:

Personalized marketing: AI is being used to analyze large amounts of customer data and create personalized marketing campaigns that are tailored to individual preferences and behaviors.

Virtual assistants: AI-powered virtual assistants like Siri, Alexa, and Google Assistant can understand natural language commands and provide information, answer questions, and perform tasks on behalf of users.

Fraud detection: AI is being used to detect fraudulent activities, such as credit card fraud, by analyzing patterns and anomalies in data.

Predictive maintenance: AI can be used to predict when equipment is likely to fail, allowing for proactive maintenance and reducing downtime.

Image recognition: AI-powered image recognition technology is being used in a range of applications, from identifying faces in photos to detecting tumors in medical imaging.

Autonomous vehicles: AI is a key component of autonomous vehicles, allowing them to perceive their environment and make decisions in real-time.

Chatbots: AI-powered chatbots are being used to provide customer support and answer frequently asked questions on websites and social media.

Recommendation engines: AI-powered recommendation engines are used by companies like Netflix and Amazon to suggest products and content to users based on their past behavior and preferences.

These are just a few examples of how AI is being used today, and the list continues to grow as new applications and technologies are developed.
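To make the fraud detection example concrete, here is a minimal sketch of "analyzing patterns and anomalies in data": it flags card transactions whose amount is far from a customer's usual spending, measured by a z-score. Production fraud systems use many more signals and learned models; the single feature, the cutoff of 3 standard deviations, and the sample amounts are assumptions for illustration.

```python
from statistics import mean, stdev


def flag_anomalies(history, new_amounts, cutoff=3.0):
    """Return new amounts more than `cutoff` standard deviations from past spending."""
    mu, sigma = mean(history), stdev(history)  # stdev needs at least two past values
    if sigma == 0:
        # Every past amount was identical, so any different amount is unusual.
        return [amt for amt in new_amounts if amt != mu]
    return [amt for amt in new_amounts if abs(amt - mu) / sigma > cutoff]


past = [42.0, 38.5, 51.0, 45.2, 39.9, 47.3, 44.1, 40.6]
print(flag_anomalies(past, [43.0, 49.5, 980.0]))  # [980.0]
```

The statistics come only from past transactions, so a single extreme new amount cannot inflate the standard deviation and hide itself.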

AI tools and services

There are many AI tools and services available today that businesses and individuals can use to leverage the power of artificial intelligence. Here are some examples:

Natural language processing (NLP) tools: NLP tools can be used to analyze and understand human language, including sentiment analysis, speech recognition, and language translation.

Machine learning platforms: Machine learning platforms provide tools and infrastructure for building and deploying machine learning models, including Google Cloud Machine Learning, Amazon SageMaker, and Microsoft Azure Machine Learning.

Chatbot platforms: Chatbot platforms enable businesses to create and deploy chatbots to interact with customers, including Dialogflow, Microsoft Bot Framework, and IBM Watson Assistant.

Computer vision services: Computer vision services can be used to analyze and interpret visual data, including image and video recognition, object detection, and facial recognition. Examples include Amazon Rekognition and Google Cloud Vision.

AI-powered analytics: AI-powered analytics tools can be used to extract insights from large amounts of data, including predictive analytics, anomaly detection, and clustering. Examples include IBM Cognos Analytics and Salesforce Einstein Analytics.

Voice assistants: Voice assistants, like Amazon Alexa and Google Home, use AI to understand and respond to natural language voice commands.

Autonomous platforms: Autonomous platforms, like self-driving cars, drones, and robots, use AI to make real-time decisions based on their environment and data inputs.

These are just a few examples of the many AI tools and services available today. As AI technology continues to evolve, we can expect to see even more innovative applications and tools emerge in the near future.

What are the Advantages and Disadvantages of Artificial Intelligence?

Artificial neural networks and deep learning AI technologies are quickly evolving, primarily because AI can process large amounts of data much faster and make predictions more accurately than humanly possible.

Advantages of AI

The following are some advantages of AI:

Efficiency and speed: AI can process vast amounts of data and perform complex tasks much faster than humans, resulting in increased efficiency and productivity.

Accuracy and consistency: AI can apply the same criteria to every decision, reducing human error and resulting in greater accuracy and consistency, although it can still inherit biases from the data it is trained on.

Cost savings: AI can automate repetitive tasks, reducing labor costs.

Improved customer experience: AI-powered chatbots and virtual assistants can provide 24/7 customer support, resulting in improved customer satisfaction.

Predictive analytics: AI can analyze large amounts of data and provide insights and predictions that can help businesses make better decisions and improve outcomes.

Disadvantages of AI

The following are some disadvantages of AI:

Job displacement: AI can replace jobs that were previously done by humans, leading to job displacement and potentially exacerbating economic inequality.

Bias and ethical concerns: AI systems can perpetuate biases and discriminate against certain groups, and there are ethical concerns around the use of AI in areas like surveillance and warfare.

Dependence on technology: Over-reliance on AI technology can lead to a loss of skills and expertise, as well as increased vulnerability to technology failures and cyberattacks.

Lack of creativity and intuition: AI lacks the creativity and intuition of human decision-making, which can limit its effectiveness in certain areas.

Unforeseen consequences: AI systems can have unintended consequences or be used in unintended ways, leading to potentially harmful outcomes.

Frequently Asked Questions

The power of AI depends on various factors such as the type of AI technology being used, the amount and quality of data available, and the sophistication of the algorithms being employed. Some AI systems are relatively simple and straightforward, while others are incredibly complex and can process vast amounts of data in real-time.

In everyday life, artificial intelligence has a wide variety of applications that accompany you from the start of your day to its end. When you start your day with your smartphone, you use AI capabilities such as face unlock or fingerprint recognition to unlock your phone.

When you then google something, you are greeted by AI features such as autocomplete and autocorrect, which can rely on sequence-to-sequence modeling. Apart from smartphones, artificial intelligence has many other applications, including email spam detection, chatbots, optical character recognition, and much more.

Alright! So by this point, you are hopefully fascinated by the various features of Artificial Intelligence, and you are excited to look for a great place to get started with Artificial Intelligence.

Artificial intelligence is a vast field. But don’t worry! There are many valuable resources and materials you can use to learn effectively. You can gain a broad range of knowledge just by studying the materials available on the internet.

Websites like Stack Overflow, Data Science Stack Exchange, and GitHub are some of the most popular places to find in-depth solutions and answers to problems or errors you encounter when installing or running your programs and code.

Can AI take over the world?

No, AI cannot take over the world. While AI has the potential to be incredibly powerful and transformative, it is ultimately a tool that is created and controlled by humans. AI systems are designed to perform specific tasks and are only as powerful as the data they’re trained on and the algorithms they use.

It’s also important to note that AI systems do not act on independent intentions. They operate within predefined boundaries and can only make decisions based on the data and instructions they’ve been given. In other words, AI is not capable of acting outside of its programming or making decisions based on emotions, desires, or intentions.

That being said, it’s important to consider the potential risks and drawbacks of AI technology, including the possibility of unintended consequences, bias, and misuse. As with any powerful technology, it’s important to carefully consider the ethical and societal implications of AI and take steps to mitigate potential risks and drawbacks.

Will AI take away jobs?

AI has the potential to automate certain tasks and make some jobs obsolete, but it’s important to remember that AI is also creating new jobs and transforming the way we work.

While AI can be used to automate repetitive and routine tasks, it’s not able to replace human creativity, judgment, and decision-making. AI systems are designed to augment and support human work, not replace it entirely.

Instead of taking jobs away, AI is creating new opportunities for workers to develop new skills and specialize in areas where human expertise is still required. For example, AI can be used to automate routine tasks in healthcare, freeing up healthcare workers to focus on more complex and challenging cases.

In order to take full advantage of the opportunities offered by AI, it’s important for workers to adapt and develop new skills that complement and enhance the capabilities of AI. This includes skills like data analysis, programming, and problem-solving, which are in high demand in many industries. By developing these skills, workers can position themselves for success in the AI-powered economy of the future.

Do you need to be a genius to learn AI?

No, you don’t need to be a genius to start learning AI. While AI can be a complex and technical field, it’s also an area that is accessible to anyone who is willing to put in the time and effort to learn.

There are many resources available for beginners who want to learn AI, including online courses, tutorials, and books. These resources can provide a solid foundation in the fundamental concepts and technologies of AI, and can help beginners develop the skills and knowledge they need to start building their own AI applications.

It’s also important to remember that AI is a rapidly evolving field, and there are always new developments and innovations to learn about. By staying curious and continuously learning, anyone can develop their skills and expertise in AI, regardless of their background or level of experience.

Conclusion

Artificial intelligence is undoubtedly a trending, emerging technology. It is growing rapidly and enabling machines to mimic aspects of human intelligence. Because of its high performance and its ability to make human life easier, it is in high demand across industries. However, AI also brings challenges and problems. Many people around the world still see it as a risky technology, fearing that if it overtakes humans it will be dangerous for humanity, as depicted in various sci-fi movies. At the same time, the day-to-day development of AI is making it a more familiar technology, and people are increasingly comfortable using it. We can therefore conclude that AI is a great technology, but each technique must be applied within appropriate limits so that it is used effectively and without harm.